Parameter Estimation : Introduction

- Parameter estimation is the technique of estimating the unknown parameters of a distribution from a given data sample.

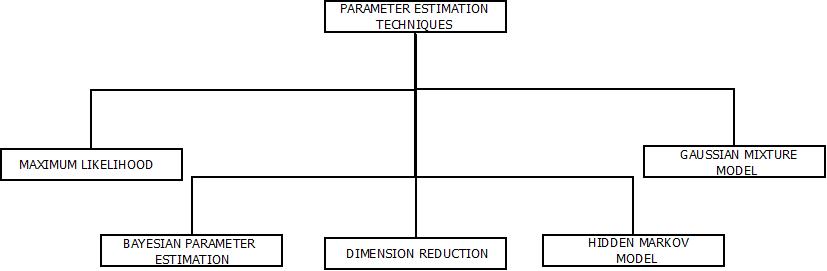

- To achieve this, a number of estimation techniques are available, as listed below.

Parameter Estimation Techniques

- The techniques available for implementing the estimation process include Dimension Reduction, Gaussian Mixture Models, and others.

- Each of them is explained in later chapters of this tutorial.

Parameter Estimation : Maximum Likelihood Estimation

- An estimation model consists of a number of parameters. Maximum Likelihood Estimation chooses the parameter values under which the observed sample is most probable.

- When the probability density function of a sample is unknown, it can be estimated by assuming a parametric form and treating its parameters as quantities with unknown but fixed values.

- In simple words, suppose we want to know the heights of the boys in a school. Measuring the height of every boy would be time consuming. If the heights are assumed to be normally distributed with unknown mean and unknown variance, maximum likelihood estimation lets us estimate the mean and variance by measuring only a small group of boys drawn from the whole school.
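
The height example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the population of heights is simulated (the values 150 cm and 8 cm are invented for the demo), and the function name `mle_normal` is hypothetical. For a normal distribution, the maximum likelihood estimates are the sample mean and the variance computed with a divisor of n.

```python
import random


def mle_normal(sample):
    """Maximum likelihood estimates (mean, variance) for a normal model."""
    n = len(sample)
    mean = sum(sample) / n
    # The MLE of the variance divides by n, not by n - 1.
    variance = sum((x - mean) ** 2 for x in sample) / n
    return mean, variance


random.seed(0)
# Assumption: simulated heights in cm for 5000 boys (illustrative values only).
population = [random.gauss(150.0, 8.0) for _ in range(5000)]

# Measure only a small group instead of the whole school.
small_group = random.sample(population, 50)
mean_hat, var_hat = mle_normal(small_group)
print("estimated mean:", mean_hat)
print("estimated variance:", var_hat)
```

With only 50 measurements the estimates will not match the true values exactly, but they should land close to them, and they improve as the group grows.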

Parameter Estimation : Bayesian Parameter Estimation

- In Bayesian Parameter Estimation, the parameters are treated as random variables with a known prior distribution.

- The main objective of Bayesian Parameter Estimation is to evaluate how varying the parameters affects density estimation.

- The aim is to estimate the posterior density P(Θ|X), where X is the observed sample.

- The final density P(x|X) is then obtained from this posterior by integrating over the parameters Θ.
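
As a concrete case, the sketch below assumes a normal model with known variance and a normal prior on the unknown mean, for which both the posterior and the integrated density have closed forms. The function names, the prior values, and the sample are hypothetical and chosen only for illustration; other models generally need numerical integration.

```python
import math


def posterior_of_mean(sample, sigma2, mu0, sigma0_2):
    """Posterior N(mu_n, sigma_n2) of the mean, given known data variance
    sigma2 and a normal prior N(mu0, sigma0_2) on the mean."""
    n = len(sample)
    xbar = sum(sample) / n
    denom = n * sigma0_2 + sigma2
    mu_n = (n * sigma0_2 * xbar + sigma2 * mu0) / denom
    sigma_n2 = sigma0_2 * sigma2 / denom
    return mu_n, sigma_n2


def predictive_density(x, mu_n, sigma_n2, sigma2):
    """P(x|X): integrating the mean out of N(x; mean, sigma2) against the
    posterior gives a normal with variance sigma2 + sigma_n2."""
    var = sigma2 + sigma_n2
    return math.exp(-(x - mu_n) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


# Assumed toy values: two observations, unit data variance, prior N(0, 1).
mu_n, sigma_n2 = posterior_of_mean([2.0, 4.0], sigma2=1.0, mu0=0.0, sigma0_2=1.0)
print("posterior mean:", mu_n, "posterior variance:", sigma_n2)
print("P(x = 3 | X):", predictive_density(3.0, mu_n, sigma_n2, 1.0))
```

The posterior mean sits between the prior mean and the sample mean, and the posterior variance shrinks as more observations arrive.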

Parameter Estimation : Expectation Maximization (EM)

- Expectation maximization is a process used for clustering a data sample.

- Given data, EM learns a theory (a model) that specifies how examples are classified, and from it predicts the feature values for each class.

- It starts with a random theory and randomly classified data, and then repeats the steps below.

- Step 1 (“E”) : The data is classified using the current theory.

- Step 2 (“M”) : A new theory is generated from the current classification of the data.

- Thus, in EM, the expected classification of each sample is generated in Step 1 and the theory is generated in Step 2.
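
The two steps can be sketched for a simple case. The example below assumes the "theory" is a mixture of two one-dimensional Gaussians and that the "classification" is a soft one (each sample gets a probability of belonging to each class). The function name `em_two_gaussians`, the synthetic data, and the starting guesses (the smallest and largest data values instead of a random start, to keep the run repeatable) are all assumptions made for illustration.

```python
import math
import random


def normal_pdf(x, mean, var):
    return math.exp(-(x - mean) ** 2 / (2 * var)) / math.sqrt(2 * math.pi * var)


def em_two_gaussians(data, iterations=50):
    # Initial theory: crude starting guesses for the two classes.
    means = [min(data), max(data)]
    variances = [1.0, 1.0]
    weights = [0.5, 0.5]
    for _ in range(iterations):
        # E step: classify each sample under the current theory
        # (probability that the sample belongs to each class).
        resp = []
        for x in data:
            p = [weights[k] * normal_pdf(x, means[k], variances[k]) for k in range(2)]
            total = sum(p)
            resp.append([pk / total for pk in p])
        # M step: generate a new theory from the current classification.
        for k in range(2):
            nk = sum(r[k] for r in resp)
            means[k] = sum(r[k] * x for r, x in zip(resp, data)) / nk
            var_k = sum(r[k] * (x - means[k]) ** 2 for r, x in zip(resp, data)) / nk
            variances[k] = max(var_k, 1e-6)  # guard against a collapsed class
            weights[k] = nk / len(data)
    return means, variances, weights


random.seed(1)
# Assumption: synthetic data drawn from two classes centred at 0 and 5.
data = [random.gauss(0.0, 1.0) for _ in range(200)]
data += [random.gauss(5.0, 1.0) for _ in range(200)]

means, variances, weights = em_two_gaussians(data)
print("means:", means)
print("variances:", variances)
print("weights:", weights)
```

A fixed number of iterations is used here for brevity; a fuller version would usually stop once the parameters or the likelihood stop changing.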